Kubernetes ELK Logging

Here ELK stands for Elasticsearch + Log-pilot + Kibana, combined to build log collection for the cluster.

Environment

- CentOS 7 x64
- Docker-ce 19.03.2
- Kubernetes v1.15.1
- Kubernetes cluster of 3 nodes (2 masters, 1 worker)

Documentation

Log-pilot usage guide: https://github.com/AliyunContainerService/log-pilot/blob/master/docs/fluentd/docs.md

Log-pilot deployment guide for K8s: https://www.alibabacloud.com/help/doc-detail/86552.html?spm=a2c5t.11065259.1996646101.searchclickresult.52c73384xdUKpz

Elasticsearch + Kibana YAML for K8s: https://github.com/kubernetes/kubernetes/tree/release-1.15/cluster/addons/fluentd-elasticsearch

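Notes

- log-pilot:0.9.7-filebeat currently does not support Elasticsearch 7.x.
- The quay.io/fluentd_elasticsearch/elasticsearch image can be pulled from inside mainland China (so with the 1.16 and later manifests the image does not need to be changed).
- gcr.io/fluentd-elasticsearch/elasticsearch needs a proxy; alternatively, search Docker Hub for elasticsearch:<version>.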
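The 7.x restriction is easy to check before picking an image. The helper below is a minimal sketch, not part of log-pilot: the function name `es_supported_by_log_pilot` is hypothetical, and it only compares the major version number.

```shell
# Hypothetical helper: succeeds when the given Elasticsearch version is older than 7.x
es_supported_by_log_pilot() {
  version="${1#v}"        # tolerate a leading "v", as used in some image tags
  major="${version%%.*}"  # keep the text before the first dot
  [ "$major" -lt 7 ]
}

es_supported_by_log_pilot 6.8.0 && echo "6.8.0: ok"
es_supported_by_log_pilot 7.3.0 || echo "7.3.0: not supported by log-pilot 0.9.7-filebeat"
```

On a running cluster the version string can be read from the `version.number` field that Elasticsearch returns on its root endpoint (port 9200).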

Deploying Elasticsearch

1. Create the PV and PVC

Skip this step if you use a different storage backend or a StorageClass that provisions volumes automatically.

elasticsearch-logging-volume.yaml

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: elasticsearch-pv-0
    spec:
      # Capacity of the PV: 1Gi
      capacity:
        storage: 1Gi
      # Access modes
      accessModes:
        # ReadWriteMany: the PV can be mounted read-write by many nodes
        - ReadWriteMany
      # Reclaim policy: what happens to the PV after its PVC is released.
      # (Recycle, which wipes all files, is discouraged; use dynamic provisioning instead.)
      persistentVolumeReclaimPolicy: Delete
      # Storage class of the PV; a PVC requests a PV by naming the same class
      storageClassName: logging
      # Directory on the NFS server that backs this PV
      nfs:
        path: /data/nfs
        server: 10.0.0.201
    ---
    # Create the PVC
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      namespace: kube-system # must match the namespace of the StatefulSet
      name: elasticsearch-logging-elasticsearch-logging-0 # ${StatefulSet.volumeClaimTemplates.name}-${StatefulSet.metadata.name}-${pod ordinal}
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 1Gi # adjust to your needs
      storageClassName: logging
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: elasticsearch-pv-1
    spec:
      # Capacity of the PV: 1Gi
      capacity:
        storage: 1Gi # adjust to your needs
      # Access modes
      accessModes:
        # ReadWriteMany: the PV can be mounted read-write by many nodes
        - ReadWriteMany
      # Reclaim policy: what happens to the PV after its PVC is released.
      # (Recycle, which wipes all files, is discouraged; use dynamic provisioning instead.)
      persistentVolumeReclaimPolicy: Delete
      # Storage class of the PV; a PVC requests a PV by naming the same class
      storageClassName: logging
      # Directory on the NFS server that backs this PV
      nfs:
        path: /data/nfs
        server: 10.0.0.201
    ---
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      namespace: kube-system # must match the namespace of the StatefulSet
      name: elasticsearch-logging-elasticsearch-logging-1 # ${StatefulSet.volumeClaimTemplates.name}-${StatefulSet.metadata.name}-${pod ordinal}
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 1Gi # adjust to your needs
      storageClassName: logging
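A StatefulSet looks for PVCs named `<volumeClaimTemplate name>-<StatefulSet name>-<pod ordinal>`, so pre-created claims must follow that pattern exactly. As a rough sketch, the names needed for a given replica count can be listed with a small helper (`pvc_names` is a hypothetical name introduced here for illustration):

```shell
# Hypothetical helper: list the PVC names a StatefulSet expects
# $1 = volumeClaimTemplate name, $2 = StatefulSet name, $3 = replica count
pvc_names() {
  i=0
  while [ "$i" -lt "$3" ]; do
    echo "$1-$2-$i"
    i=$((i + 1))
  done
}

pvc_names elasticsearch-logging elasticsearch-logging 2
# prints:
# elasticsearch-logging-elasticsearch-logging-0
# elasticsearch-logging-elasticsearch-logging-1
```

Each printed name needs a matching PersistentVolumeClaim in the StatefulSet's namespace.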

2. Deploy Elasticsearch

2.1 Download the manifest

    wget https://raw.githubusercontent.com/kubernetes/kubernetes/release-1.15/cluster/addons/fluentd-elasticsearch/es-statefulset.yaml

2.2 Edit the manifest (es-statefulset.yaml)

    # RBAC authn and authz

2.3 Create the service

    # Deploy the StatefulSet
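The commands for this step could look roughly like the sketch below. It is an assumption rather than the author's exact command list: the wrapper name `deploy_elasticsearch` is hypothetical, the file names are the ones used earlier in this article, and the StatefulSet is assumed to be named `elasticsearch-logging` in `kube-system`, as the PVC names imply.

```shell
# Hypothetical wrapper around the steps of this section
deploy_elasticsearch() {
  # Create the PVs and PVCs first so the pods can bind their volumes
  kubectl apply -f elasticsearch-logging-volume.yaml || return 1
  # Deploy the StatefulSet together with its RBAC objects
  kubectl apply -f es-statefulset.yaml || return 1
  # Block until every replica reports ready
  kubectl -n kube-system rollout status statefulset/elasticsearch-logging
}
```

Run `deploy_elasticsearch` from the directory that holds both files; `kubectl get pods -n kube-system -o wide | grep elasticsearch` then shows where the pods were scheduled.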

3. Deploy Log-pilot

If something goes wrong during deployment, check the logs under /var/log/filebeat on any host.

log-pilot.yaml

    # extensions/v1beta1 DaemonSets are served up to Kubernetes 1.15;
    # from 1.16 on, use apps/v1 and add spec.selector
    apiVersion: extensions/v1beta1
    kind: DaemonSet
    metadata:
      name: log-pilot
      labels:
        app: log-pilot
      # Namespace to deploy into
      namespace: kube-system
    spec:
      updateStrategy:
        type: RollingUpdate
      template:
        metadata:
          labels:
            app: log-pilot
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
        spec:
          # Allow scheduling onto master nodes
          tolerations:
          - key: node-role.kubernetes.io/master
            effect: NoSchedule
          serviceAccountName: elasticsearch-logging
          containers:
          - name: log-pilot
            # For available versions see https://github.com/AliyunContainerService/log-pilot/releases
            image: registry.cn-hangzhou.aliyuncs.com/acs/log-pilot:0.9.7-filebeat
            resources:
              limits:
                memory: 500Mi
              requests:
                cpu: 200m
                memory: 200Mi
            env:
              - name: "NODE_NAME"
                valueFrom:
                  fieldRef:
                    fieldPath: spec.nodeName
              - name: "LOGGING_OUTPUT"
                value: "elasticsearch"
              # Make sure ES is reachable from the cluster network
              - name: "ELASTICSEARCH_HOSTS"
                value: "elasticsearch-logging:9200" # {es_endpoint}:{es_port}
              # ES credentials
              #- name: "ELASTICSEARCH_USER"
              #  value: "{es_username}"
              #- name: "ELASTICSEARCH_PASSWORD"
              #  value: "{es_password}"
            volumeMounts:
            - name: sock
              mountPath: /var/run/docker.sock
            - name: root
              mountPath: /host
              readOnly: true
            - name: varlib
              mountPath: /var/lib/filebeat
            - name: varlog
              mountPath: /var/log/filebeat
            - name: localtime
              mountPath: /etc/localtime
              readOnly: true
            livenessProbe:
              failureThreshold: 3
              exec:
                command:
                - /pilot/healthz
              initialDelaySeconds: 10
              periodSeconds: 10
              successThreshold: 1
              timeoutSeconds: 2
            securityContext:
              capabilities:
                add:
                - SYS_ADMIN
          terminationGracePeriodSeconds: 30 # maximum time (seconds) a pod is given to shut down
          volumes:
          - name: sock
            hostPath:
              path: /var/run/docker.sock
          - name: root
            hostPath:
              path: /
          - name: varlib
            hostPath:
              path: /var/lib/filebeat
              type: DirectoryOrCreate
          - name: varlog
            hostPath:
              path: /var/log/filebeat
              type: DirectoryOrCreate
          - name: localtime
            hostPath:
              path: /etc/localtime

Create the service

    kubectl apply -f log-pilot.yaml
    # Check the deployment status
    kubectl get pods -n kube-system -o wide | grep log-pilot

4. Deploy Kibana

4.1 Download the manifest

    wget https://raw.githubusercontent.com/kubernetes/kubernetes/release-1.15/cluster/addons/fluentd-elasticsearch/kibana-deployment.yaml

4.2 Edit the manifest (kibana-deployment.yaml)

Comment out the SERVER_BASEPATH variable. If you access Kibana through kubectl proxy, leave it uncommented. Also add a CLUSTER_NAME variable.
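Commenting out SERVER_BASEPATH by hand is simple, but it can also be scripted. The following is a minimal sketch, assuming the variable is written as a `- name: SERVER_BASEPATH` list entry immediately followed by its `value:` line; the function name `comment_out_basepath` and the sample input are made up for illustration.

```shell
# Hypothetical helper: comment out the SERVER_BASEPATH entry and the line after it.
# Reads the named file, or standard input when no file is given.
comment_out_basepath() {
  sed '/- name: SERVER_BASEPATH/{
s/^\( *\)/\1# /
n
s/^\( *\)/\1# /
}' "$@"
}

# Illustrative input only; names and values here are placeholders
printf '%s\n' \
  '- name: OTHER_VAR' \
  '  value: kept' \
  '- name: SERVER_BASEPATH' \
  '  value: /placeholder/base/path' |
  comment_out_basepath
```

To apply it to the real file, something like `comment_out_basepath kibana-deployment.yaml > kibana-deployment.edited.yaml` keeps the original untouched for comparison.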

    apiVersion: apps/v1

4.3 Create the Ingress manifest

kibana-ingress.yaml

    apiVersion: extensions/v1beta1

4.4 Create the service

    kubectl apply -f kibana-deployment.yaml

5. 测试demo

tomcat-log.yaml1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61#deploy

apiVersion: apps/v1

kind: Deployment

metadata:

name: web-demo

spec:

selector:

matchLabels:

app: web-demo

replicas: 3

template:

metadata:

labels:

app: web-demo

spec:

containers:

- name: web-demo

image: tomcat:8-slim

ports:

- containerPort: 8080

env:

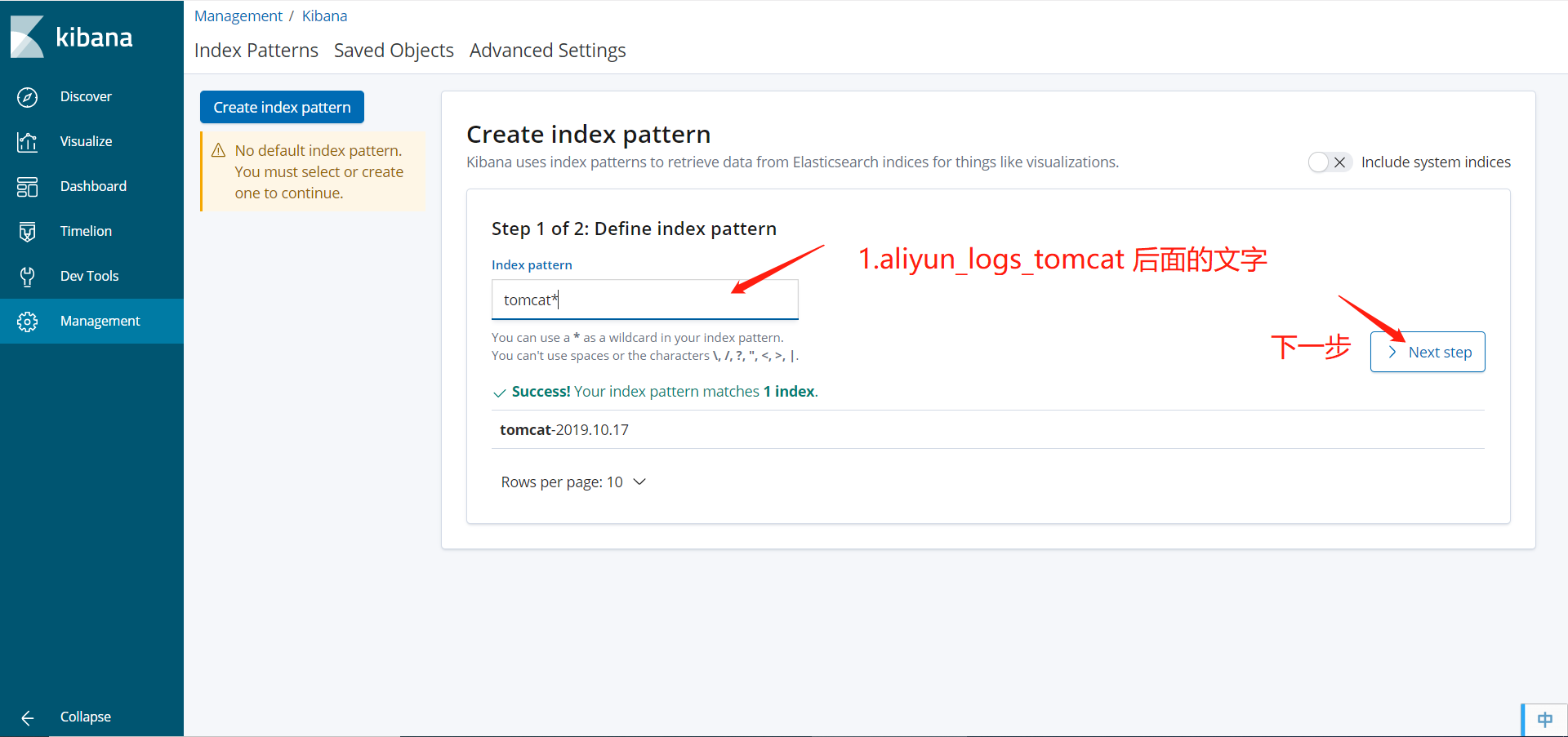

- name: aliyun_logs_tomcat # 提取命令行日志

value: "stdout"

- name: aliyun_logs_access # 提取指定位置的日志文件

value: "/usr/local/tomcat/logs/*"

volumeMounts:

- mountPath: /usr/local/tomcat/logs # 容器内文件日志路径需要配置emptyDir

name: accesslogs

volumes:

- name: accesslogs

emptyDir: {}

#service

apiVersion: v1

kind: Service

metadata:

name: web-demo

spec:

ports:

- port: 80

protocol: TCP

targetPort: 8080

selector:

app: web-demo

type: ClusterIP

#ingress

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: web-demo

spec:

rules:

- host: web.chc.com

http:

paths:

- path: /

backend:

serviceName: web-demo

servicePort: 80查看es索引创建情况
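Before looking at the index list it helps to know which names to expect. log-pilot takes the index name from the part after `aliyun_logs_` in the environment variable, and the listing in this section shows a date suffix appended. The sketch below derives the name on that assumption; `expected_index` is a hypothetical helper, and the `YYYY.MM.DD` date format is inferred from that listing rather than from log-pilot's documentation.

```shell
# Hypothetical helper: derive the index name from an aliyun_logs_* variable name and a date
# $1 = variable name, $2 = date in YYYY.MM.DD form
expected_index() {
  name="${1#aliyun_logs_}"   # strip the prefix that log-pilot looks for
  echo "${name}-${2}"
}

expected_index aliyun_logs_tomcat 2019.10.17   # prints tomcat-2019.10.17
expected_index aliyun_logs_access 2019.10.17   # prints access-2019.10.17
```

For the current day, `expected_index aliyun_logs_tomcat "$(date +%Y.%m.%d)"` gives the name to look for.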

1

2

3

4

5

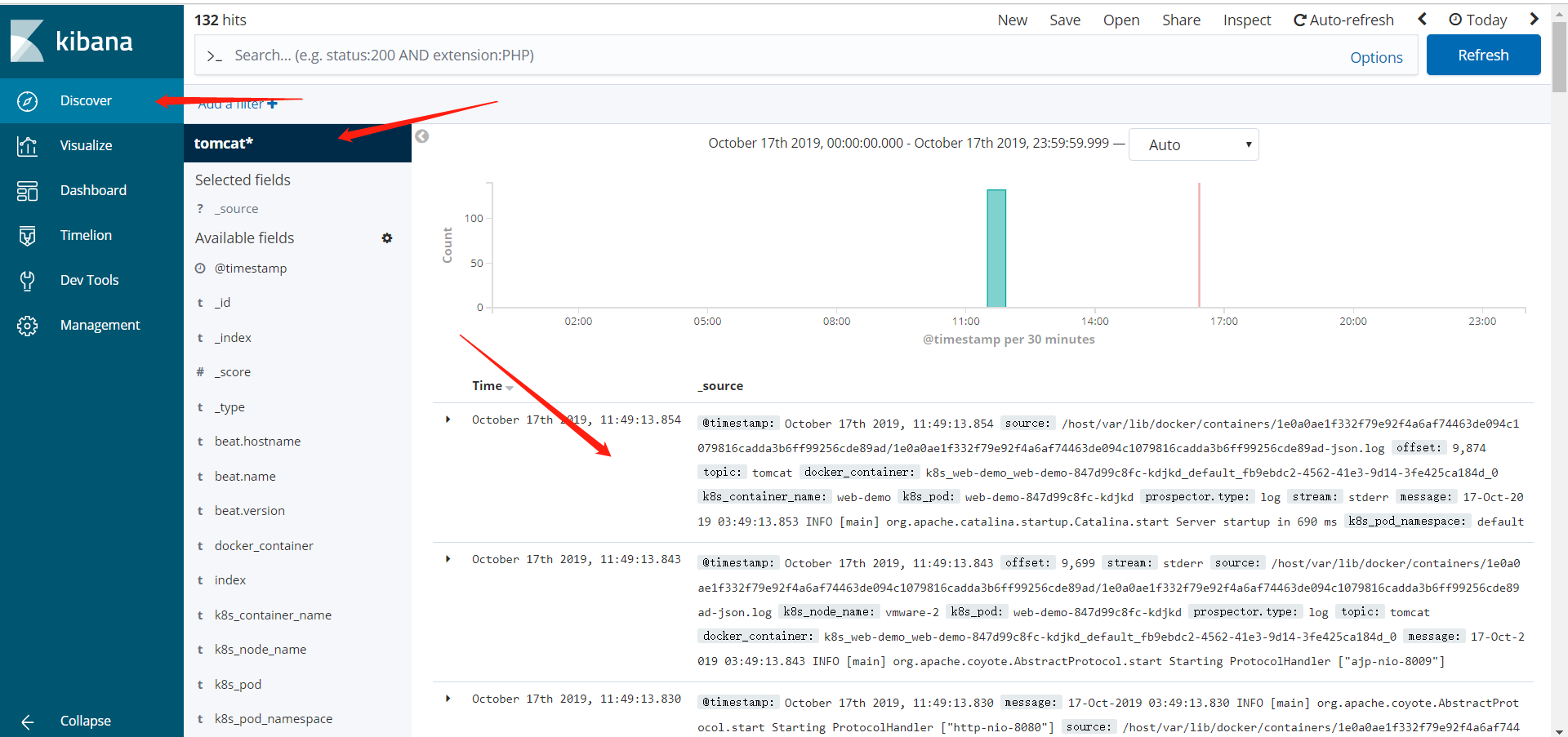

6[root@vmware-1 ~]# curl 10.101.151.108:9200/_cat/indices?v

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open access-2019.10.17 eMvfmntrSPybJNXEYeAm0Q 5 1 158 0 868.6kb 453.2kb

green open tomcat-2019.10.17 zV3ZDjy5TL-Ajg8MSlXepA 5 1 132 0 560.4kb 280.9kb

green open .kibana_1 NrYxSm59RWKbov2bKRexfg 1 1 3 0 34.4kb 17.2kb

[root@vmware-1 ~]#
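With more than a handful of indices, the health column is easier to filter than to read by eye. A small sketch, assuming the column layout of `_cat/indices?v` shown here (health first, index name third); the function name `non_green_indices` and the yellow sample row are made up for illustration.

```shell
# Hypothetical helper: print the names of indices whose health is not green.
# Expects `_cat/indices?v` output (with its header row) on standard input.
non_green_indices() {
  awk 'NR > 1 && $1 != "green" { print $3 }'
}

# Sample input: the second data row is invented to show a non-green index
printf '%s\n' \
  'health status index uuid pri rep docs.count docs.deleted store.size pri.store.size' \
  'green open access-2019.10.17 eMvfmntrSPybJNXEYeAm0Q 5 1 158 0 868.6kb 453.2kb' \
  'yellow open demo-index placeholderuuid 5 1 0 0 1kb 1kb' |
  non_green_indices   # prints demo-index
```

Against the live cluster the same filter can be fed from `curl -s '10.101.151.108:9200/_cat/indices?v'`; empty output means every listed index is green.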

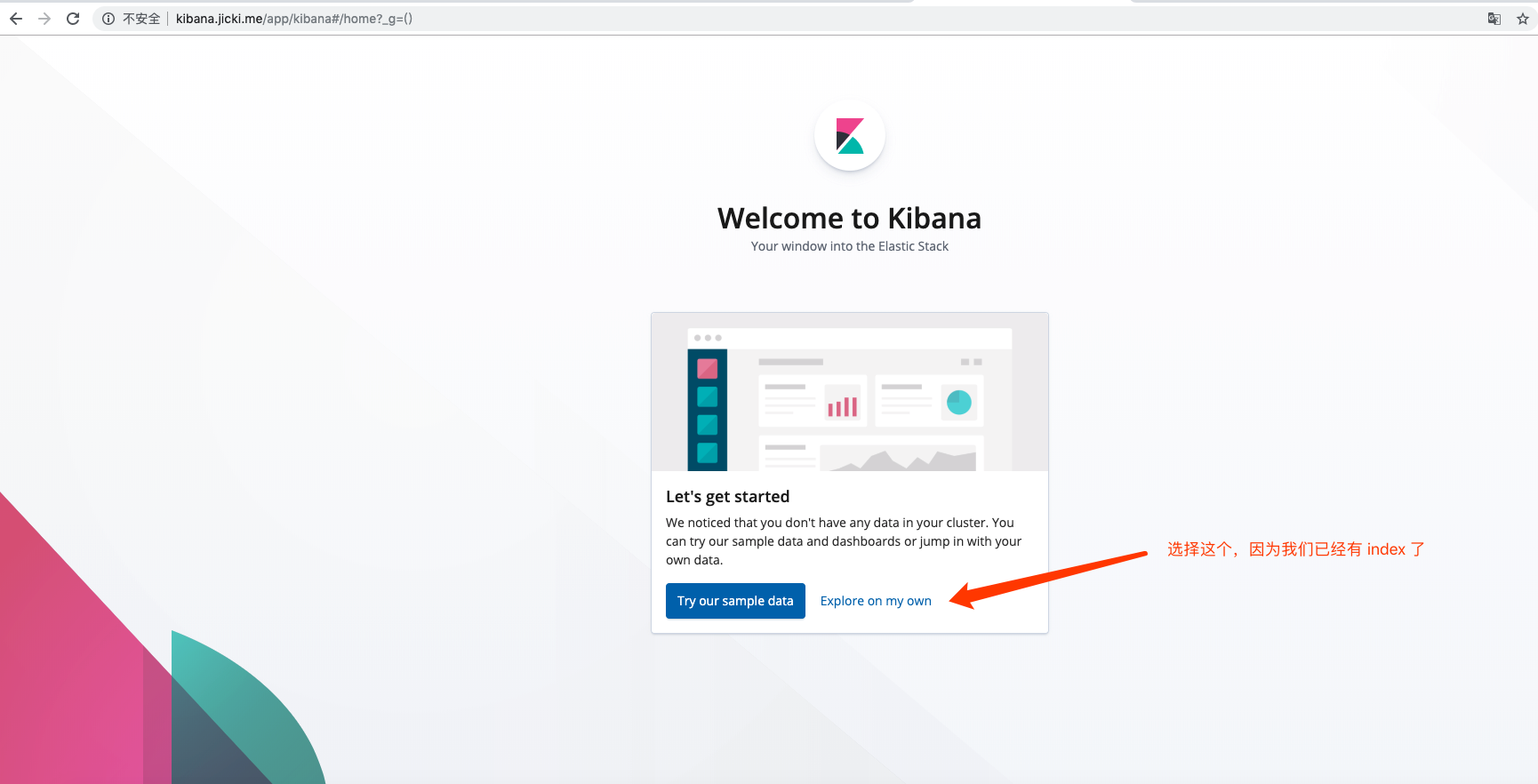

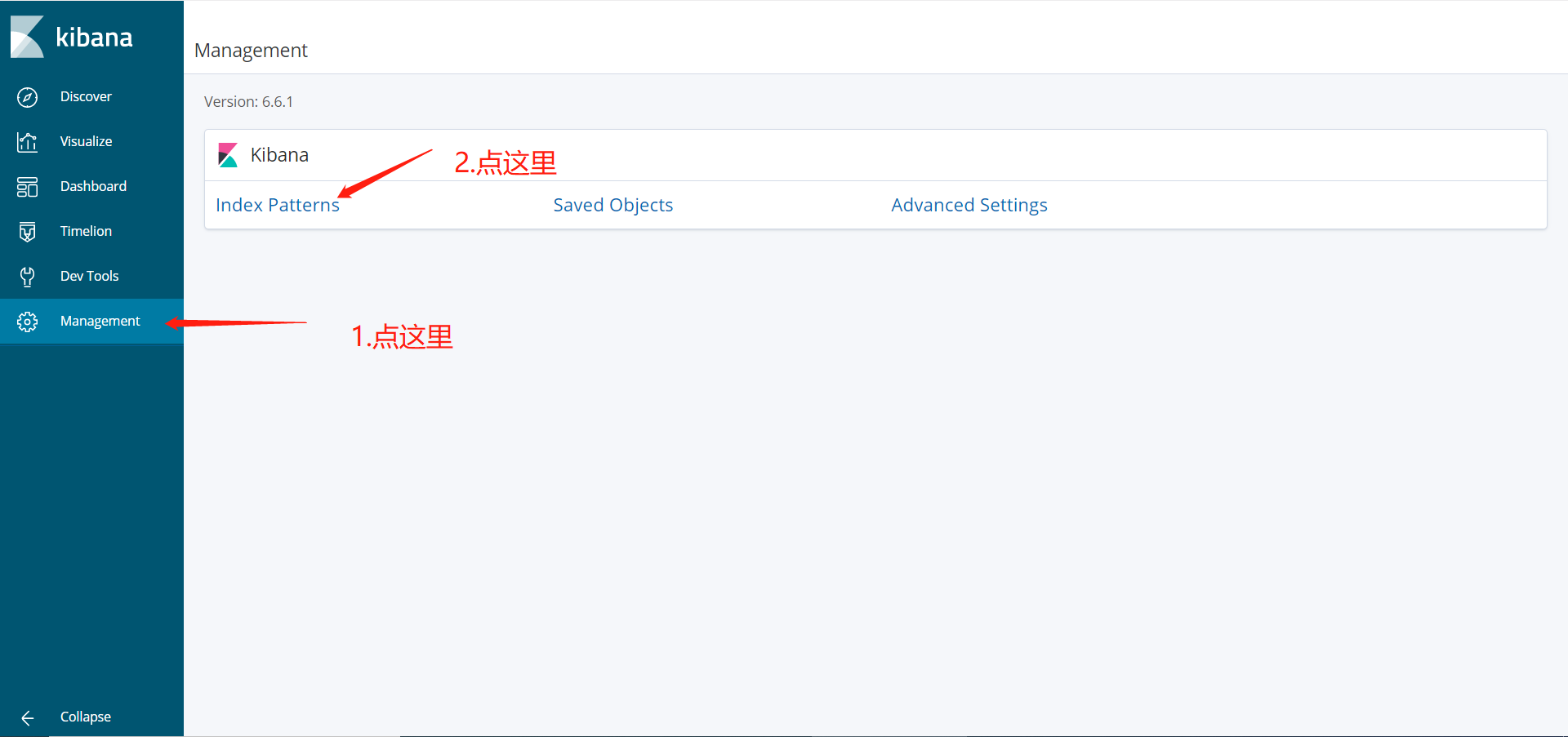

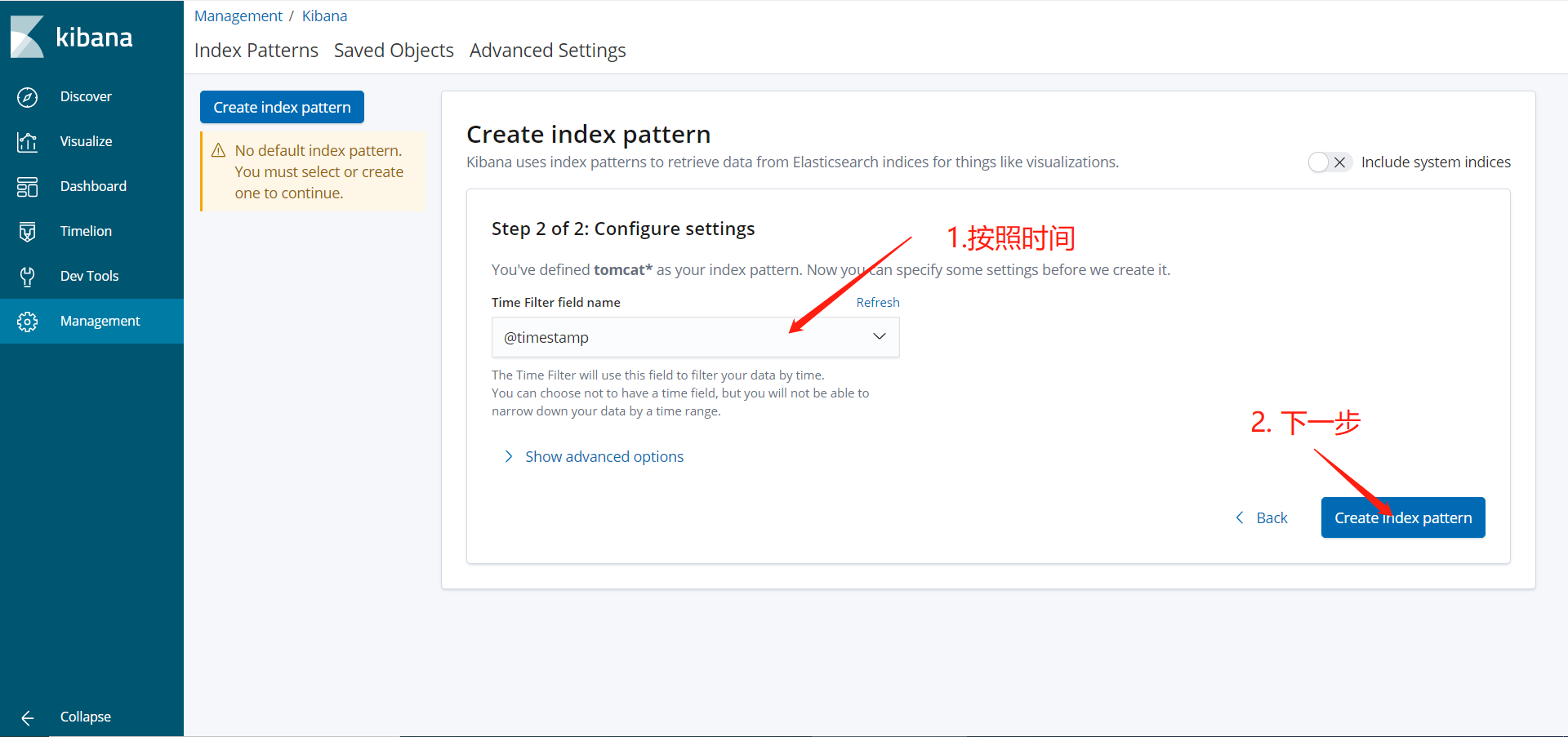

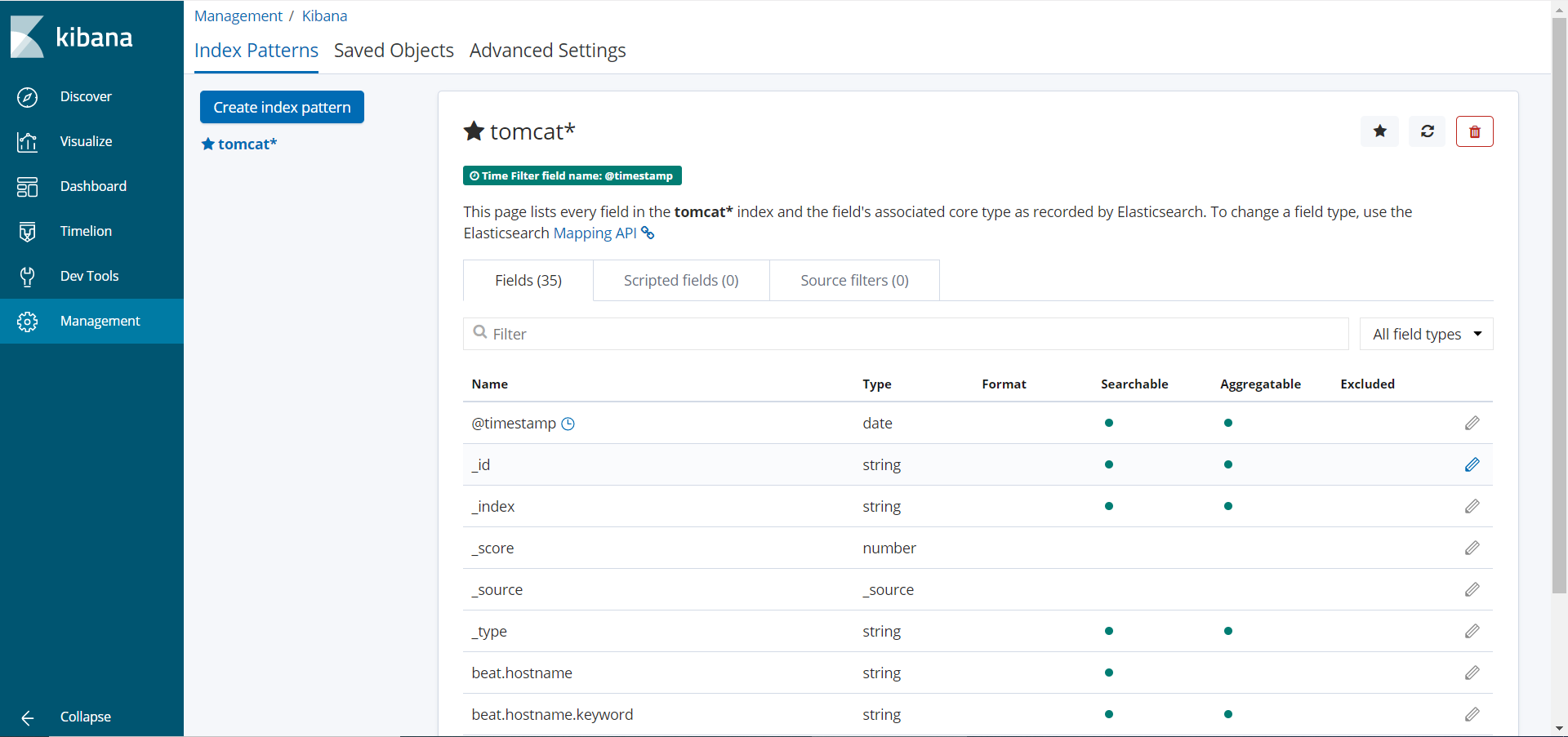

5.1 Configure logging

Unless otherwise stated, all articles on this blog are licensed under CC BY-NC-SA 4.0. When reposting, please credit Chc-个人数据程序主页!